DuMux applications

Example application

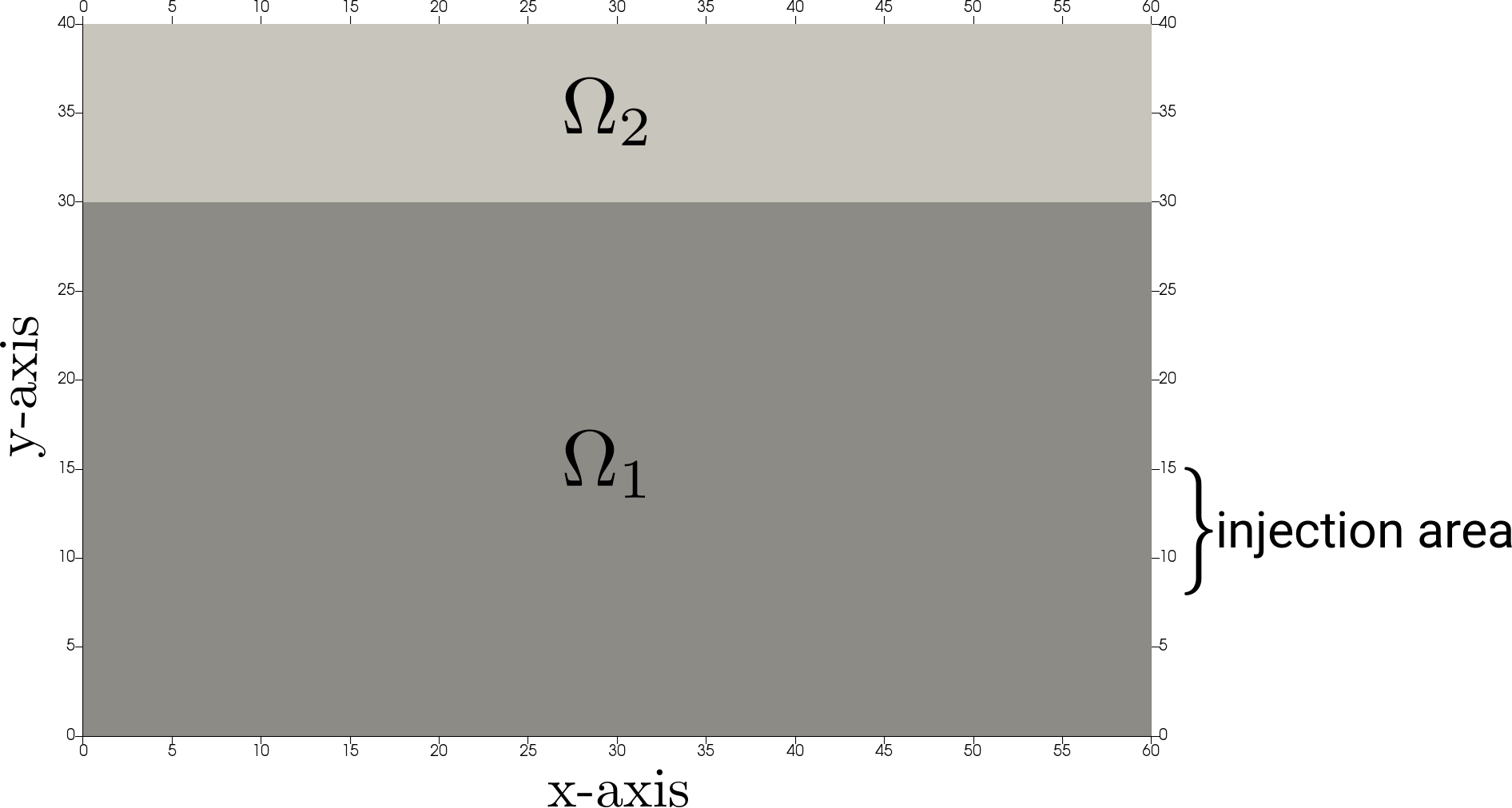

Simulation goal

Gas injection / immiscible two phase flow

Mass balance equations for two fluid phases: \[ \begin{aligned} \frac{\partial \left(\phi \varrho_\alpha S_\alpha \right)}{\partial t} + \nabla \cdot \left(\varrho_\alpha \boldsymbol{v}_\alpha \right) - q_\alpha = 0, \quad \alpha \in \lbrace w, n \rbrace. \end{aligned} \]

Momentum balance equations (multiphase-phase Darcy’s law): \[ \begin{aligned} \boldsymbol{v}_\alpha = -\frac{k_{r\alpha}}{\mu_\alpha} \mathbf{K} \left(\nabla\, p_\alpha - \varrho_{\alpha} \mathbf{g} \right), \quad \alpha \in \lbrace w, n \rbrace. \end{aligned} \]

Gas injection / immiscible two phase flow

\[ \begin{aligned} \frac{\partial \left(\phi \varrho_\alpha S_\alpha \right)}{\partial t} - \nabla \cdot \left( \varrho_\alpha \frac{k_{r\alpha}}{\mu_\alpha} \mathbf{K} \left(\nabla\, p_\alpha - \varrho_{\alpha} \mathbf{g} \right) \right) - q_\alpha = 0 \end{aligned} \]

- \(p_w\), \(p_n\): wetting and non-wetting fluid phase pressure

- \(\varrho_\alpha\), \(\mu_\alpha\): fluid phase density and dynamic viscosity

- \(\phi\), \(\mathbf{K}\), \(k_{r\alpha}\): porosity, intrinsic permeability, relative permeability

- \(S_w\), \(S_n\): wetting and non-wetting saturation

- \(\mathbf{g}\), \(q_\alpha\): gravitational potential, source term

Gas injection / immiscible two phase flow

\[ \begin{aligned} \frac{\partial \left(\phi \varrho_\alpha S_\alpha \right)}{\partial t} - \nabla \cdot \left( \varrho_\alpha \frac{k_{r\alpha}}{\mu_\alpha} \mathbf{K} \left(\nabla\, p_\alpha - \varrho_{\alpha} \mathbf{g} \right) \right) - q_\alpha = 0 \end{aligned} \]

- Constitutive relations: \(p_n := p_w + p_c\), \(p_c := p_c(S_w)\), \(k_{r\alpha}\) = \(k_{r\alpha}(S_w)\)

- Physical constraint: \(S_w + S_n = 1\)

- Primary variables: \(p_w\), \(S_n\) (wetting phase pressure, non-wetting phase saturation)

A DuMux application

A DuMux application

The source code for a typical DuMux application consists of:

- Property specializations (e.g.

properties.hh) - Problem class definition (e.g.

problem.hh) and often a spatial parameter class definition (e.g.spatialparams.hh) - A parameter configuration file (e.g.

params.input) - The main program (e.g.

main.cc) CMakeLists.txt(CMake build system configuration)

Property specializations

Properties

“Compile-time configuration”

“Tell DuMux what type of classes to use”

Details on properties in Part II: Property system

*properties*.hh

Create tag, choose model and discretization scheme:

namespace Dumux::Properties::TTag {

// the application type tag (choose any name)

struct Injection2pCC { using InheritsFrom = std::tuple<TwoP, CCTpfaModel>; };

} // end namespace Dumux::Properties::TTagFile:

dumux-course/exercises/exercise-basic/properties2p.hh

Specialize the Grid property for tag

TTag::Injection2pCC to set the grid type:

namespace Dumux::Properties {

template<class TypeTag>

struct Grid<TypeTag, TTag::Injection2pCC>

{ using type = Dune::YaspGrid<2>; }; // Yet Another Structured Parallel Grid

} // end namespace Dumux::PropertiesFile:

dumux-course/exercises/exercise-basic/properties2p.hh

Specialize the Problem property to set the problem

type:

namespace Dumux::Properties {

template<class TypeTag>

struct Problem<TypeTag, TTag::Injection2pCC>

{ using type = InjectionProblem2P<TypeTag>; };

} // end namespace Dumux::PropertiesFile:

dumux-course/exercises/exercise-basic/properties2p.hh

The problem class InjectionProblem2P is discussed in one

of the following sections.

Specialize the SpatialParams property to set the spatial

parameter type:

namespace Dumux::Properties {

template<class TypeTag>

struct SpatialParams<TypeTag, TTag::Injection2pCC>

{

using GridGeometry

= GetPropType<TypeTag, Properties::GridGeometry>;

using Scalar

= GetPropType<TypeTag, Properties::Scalar>;

using type = InjectionSpatialParams<GridGeometry, Scalar>;

};

} // end namespace Dumux::PropertiesFile:

dumux-course/exercises/exercise-basic/properties2p.hh

Specialize the FluidSystem property to set the fluid

system type:

namespace Dumux::Properties {

template<class TypeTag>

struct FluidSystem<TypeTag, TTag::Injection2pCC>

{

using Scalar = GetPropType<TypeTag, Properties::Scalar>;

using Policy = FluidSystems::H2ON2DefaultPolicy<

/*fastButSimplifiedRelations=*/true>;

using type = FluidSystems::H2ON2<Scalar, Policy>;

};

} // end namespace Dumux::PropertiesFile:

dumux-course/exercises/exercise-basic/properties2p.hh

Problem definition

Problem

A “problem” class in DuMux implements a specific scenario:

*problem*.hh

template<class TypeTag>

class InjectionProblem2P : public PorousMediumFlowProblem<TypeTag>

{

// - boundary conditions

// - initial conditions

// - source terms

// - scenario name (for output)

}File:

dumux-course/exercises/exercise-basic/injection2pproblem.hh

Inherit from base class PorousMediumFlowProblem, only

override scenario-specific functions (static polymorphism).

The boundary condition types:

BoundaryTypes boundaryTypesAtPos(const GlobalPosition &globalPos) const

{

BoundaryTypes bcTypes;

// Use Dirichlet boundary conditions on the left

if (globalPos[0] < eps_)

bcTypes.setAllDirichlet();

// Use Neumann boundary conditions on the rest of the boundary

else

bcTypes.setAllNeumann();

return bcTypes;

}File:

dumux-course/exercises/exercise-basic/injection2pproblem.hh

Dirichlet boundary condition values (only called on Dirichlet boundaries)

PrimaryVariables dirichletAtPos(const GlobalPosition& globalPos) const

{ return initialAtPos(globalPos); }File:

dumux-course/exercises/exercise-basic/injection2pproblem.hh

PrimaryVariables is an array of primary variables (here,

the size of the array is 2).

More general Dirichlet boundary functions:

File:

dumux/dumux/common/fvproblem.hh

File:

dumux/dumux/common/fvproblem.hh

Neumann boundary condition values / boundary fluxes (only called on Neumann boundaries)

NumEqVector neumannAtPos(const GlobalPosition& globalPos) const

{

NumEqVector values(0.0);

if (injectionActive() && onInjectionBoundary(globalPos))

{

values[Indices::conti0EqIdx + FluidSystem::N2Idx] = -1e-4;

values[Indices::conti0EqIdx + FluidSystem::H2OIdx] = 0.0;

}

return values;

}File:

dumux-course/exercises/exercise-basic/injection2pproblem.hh

NumEqVector is an array of equations (here, the size of

the array is 2).

More general Neumann boundary function:

template<class ElementVolumeVariables, class ElementFluxVariablesCache>

NumEqVector neumann(const Element& element,

const FVElementGeometry& fvGeometry,

const ElementVolumeVariables& elemVolVars,

const ElementFluxVariablesCache& elemFluxVarsCache,

const SubControlVolumeFace& scvf) const

{

...

}File:

dumux/dumux/common/fvproblem.hh

Initial conditions:

PrimaryVariables initialAtPos(const GlobalPosition& globalPos) const

{

PrimaryVariables values(0.0);

const Scalar densityW

= FluidSystem::H2O::liquidDensity(temperature(), 1.0e5);

const Scalar pw

= 1.0e5 - densityW*this->gravity()[dimWorld-1]

*(aquiferDepth_ - globalPos[dimWorld-1]);

values[Indices::pressureIdx] = pw;

values[Indices::saturationIdx] = 0.0;

return values;

}File:

dumux-course/exercises/exercise-basic/injection2pproblem.hh

Source/sink terms:

File:

dumux-course/exercises/exercise-basic/injection2pproblem.hh

More general source/sink function:

template<class ElementVolumeVariables>

NumEqVector source(const Element &element,

const FVElementGeometry& fvGeometry,

const ElementVolumeVariables& elemVolVars,

const SubControlVolume &scv) const

{

...

}File:

dumux/dumux/common/fvproblem.hh

Spatial Parameters definition

Spatial parameters

*spatialparams*.hh

template<class FVGridGeometry, class Scalar>

class InjectionSpatialParams

: public FVPorousMediumFlowSpatialParamsMP<

FVGridGeometry, Scalar,

InjectionSpatialParams<FVGridGeometry, Scalar>

> {

// parameters that are typically position-dependent

// e.g. porosity, permeability

};File:

dumux-course/exercises/exercise-basic/injection2pspatialparams.hh

Inherit from FVPorousMediumFlowSpatialParamsMP where

FV: finite volumes, MP: multi-phase flow.

A function returning the intrinsic permeability \(K\):

auto permeabilityAtPos(const GlobalPosition& globalPos) const

{

if (isInAquitard_(globalPos))

return aquitardK_;

return aquiferK_;

}File:

dumux-course/exercises/exercise-basic/injection2pspatialparams.hh

A function returning the porosity:

Scalar porosityAtPos(const GlobalPosition& globalPos) const

{

// here, either aquitard or aquifer porosity are returned

if (isInAquitard_(globalPos))

return aquitardPorosity_;

return aquiferPorosity_;

}File:

dumux-course/exercises/exercise-basic/injection2pspatialparams.hh

A function returning a parameterized constitutive law describing fluid-matrix interactions (\(p_c(S_w)\), \(k_r(S_w)\)):

auto fluidMatrixInteractionAtPos(const GlobalPosition& globalPos) const

{

if (isInAquitard_(globalPos))

return makeFluidMatrixInteraction(aquitardPcKrSwCurve_);

return makeFluidMatrixInteraction(aquiferPcKrSwCurve_);

}File:

dumux-course/exercises/exercise-basic/injection2pspatialparams.hh

Set the (constant) temperature field in the domain:

File:

dumux-course/exercises/exercise-basic/injection2pspatialparams.hh

Runtime parameters

Runtime parameters

Dune INI syntax ([Group] and Key = Value

pairs)

params.input

File:

dumux/test/porousmediumflow/2p/nonisothermal/params.input

See Part I: Runtime parameters for details.

Main program

- Each problem has one main file (

test_name.ccormain.cc) - Here:

2pmain.ccand2pnimain.cc - Contains the

mainfunction (mandatory in C/C++ programs)

Main function

Calling Dumux::initialize is mandatory at the beginning

of each DuMux program to make sure the environment is

correctly set up.

int main(int argc, char** argv)

{

// initialize MPI+X backends (mandatory)

Dumux::initialize(argc, argv);File:

dumux-course/exercises/exercise-basic/2pmain.cc

Parse runtime parameters:

File:

dumux-course/exercises/exercise-basic/2pmain.cc

See Part I: Runtime parameters for details.

Define an alias for the test problem type tag

File:

dumux-course/exercises/exercise-basic/2pmain.cc

The tag (tag alias) is used to extract types and values via the property system (details on properties in Part II: Property system).

Create the computational grid:

// create a grid (take parameters from input file)

GridManager<GetPropType<TypeTag, Properties::Grid>> gridManager;

gridManager.init();

// obtain the grid and a grid view

const auto& grid = gridManager.grid();

const auto& leafGridView = grid.leafGridView();File:

dumux-course/exercises/exercise-basic/2pmain.cc

More details on the grid in Part I: Grid.

GridGeometry and Problem instantiation:

// create the finite volume grid geometry

// (represents the chosen discretization scheme)

using GridGeometry = GetPropType<TypeTag, Properties::GridGeometry>;

auto gridGeometry = std::make_shared<GridGeometry>(leafGridView);

// the problem (initial and boundary conditions)

using Problem = GetPropType<TypeTag, Properties::Problem>;

auto problem = std::make_shared<Problem>(gridGeometry);File:

dumux-course/exercises/exercise-basic/2pmain.cc

Initial solution and secondary variables:

// the solution vector

using SolutionVector

= GetPropType<TypeTag, Properties::SolutionVector>;

SolutionVector x(gridGeometry->numDofs());

// the grid variables

using GridVariables = GetPropType<TypeTag, Properties::GridVariables>;

auto gridVariables

= std::make_shared<GridVariables>(problem, gridGeometry);

gridVariables->init(x);File:

dumux-course/exercises/exercise-basic/2pmain.cc

Setting up VTK output:

// initialize the vtk output module

VtkOutputModule<GridVariables, SolutionVector>

vtkWriter(*gridVariables, x, problem->name());

// optionally add a velocity post-processor

using VelocityOutput

= GetPropType<TypeTag, Properties::VelocityOutput>;

vtkWriter.addVelocityOutput(

std::make_shared<VelocityOutput>(*gridVariables));

// add input/output defaults for the chosen model

using IOFields = GetPropType<TypeTag, Properties::IOFields>;

IOFields::initOutputModule(vtkWriter);File:

dumux-course/exercises/exercise-basic/2pmain.cc

Some typical main function structures:

- Steady-state linear problems

- Steady-state non-linear problems

- Time-dependent non-linear problems

Assembling the system for a steady-state linear problem:

// the assembler for stationary problems

using Assembler = FVAssembler<TypeTag, DiffMethod::numeric>;

auto assembler = std::make_shared<Assembler>(

problem, gridGeometry, gridVariables);

// the discretization matrices for stationary linear problems

using JacobianMatrix

= GetPropType<TypeTag, Properties::JacobianMatrix>;

auto A = std::make_shared<JacobianMatrix>();

auto r = std::make_shared<SolutionVector>();

assembler->setLinearSystem(A, r);

assembler->assembleJacobianAndResidual(x);File:

dumux-course/exercises/exercise-mainfile/exercise1pamain.cc

and solve it using an iterative method:

using LinearSolver = ILUBiCGSTABIstlSolver<

LinearSolverTraits<GridGeometry>,

LinearAlgebraTraitsFromAssembler<Assembler>>;

auto linearSolver = std::make_shared<LinearSolver>(

gridGeometry->gridView(), gridGeometry->dofMapper());

(*r) *= -1.0; // solve Ax = -r to save update and copy

linearSolver->solve(*A, x, *r);File:

dumux-course/exercises/exercise-mainfile/exercise1pamain.cc

Simplified code for steady-state linear problem using

Dumux::LinearPDESolver

auto assembler = ...; // construct as before

auto linearSolver = ...; // construct as before

using Solver = LinearPDESolver<Assembler, LinearSolver>;

Solver solver(assembler, linearSolver);

// assemble & solve

solver.solve(x);File:

dumux/test/porousmediumflow/1p/rootbenchmark/main.cc

For steady-state non-linear problems, use

Dumux::NewtonSolver:

auto assembler = ...; // construct as before

auto linearSolver = ...; // construct as before

using Solver = NewtonSolver<Assembler, LinearSolver>;

Solver solver(assembler, linearSolver);

// linearize & solve

solver.solve(x);File:

dumux-course/exercises/exercise-mainfile/exercise1pbmain.cc

For time-dependent problems, pass a TimeLoop instance

and a solution vector carrying the solution on the previous/initial time

level to the assembler:

SolutionVector xOld = x;

auto timeLoop = std::make_shared<TimeLoop<Scalar>(0.0, dt, tEnd);

timeLoop->setMaxTimeStepSize(maxDt);

using Assembler = FVAssembler<TypeTag, DiffMethod::numeric>;

auto assembler = std::make_shared<Assembler>(

problem, gridGeometry, gridVariables,

timeLoop, xOld // <-- new arguments

);

// assemble linear/non-linear problem as before

// ...File:

dumux-course/exercises/exercise-basic/2pmain.cc

The skeleton of a time loop:

File:

dumux-course/exercises/exercise-basic/2pmain.cc

Solving a time step:

// time loop

timeLoop->start(); do

{

// linearize & solve

nonLinearSolver.solve(x, *timeLoop);

// make the new solution the old solution

xOld = x;

gridVariables->advanceTimeStep();File:

dumux-course/exercises/exercise-basic/2pmain.cc

Advancing the time loop to the next step:

// advance to the time loop to the next step

timeLoop->advanceTimeStep();

// report statistics of this time step

timeLoop->reportTimeStep();

// set new dt as suggested by the newton solver

timeLoop->setTimeStepSize(

nonLinearSolver.suggestTimeStepSize(timeLoop->timeStepSize())

);

} while (!timeLoop->finished());

// print final report

timeLoop->finalize(leafGridView.comm());File:

dumux-course/exercises/exercise-basic/2pmain.cc

Build system (CMake)

Typical C++ build steps

- configure

- compile

- install (copy programs to some location)

Step 3 is usually skipped by DuMux developers. But you can of course “install” Dune modules and DuMux.

Build system - what is CMake?

- Open-source build system tool developed by KITware

- Offers a one-tool-solution to all building tasks, like configuring, building, linking, testing and packaging

- Is portable and supports cross-platform compilation

- Build file generator: Support various backends called generators (default: GNU Makefiles)

- Control files written in the CMake language

Build system - CMakeLists.txt

- Every directory in a project contains a file called

CMakeLists.txt, which is written in the CMake language - Upon configure, the top-level

CMakeLists.txtis executed - Subfolders are included with the

add_subdirectory(...)function

Build system - configuring

- Configure build-time compiler parameters / linking information

- Create “targets” that can be build to create executables

- Find and link external libraries / software dependencies

Build system - dunecontrol

- For Dune, CMake is triggered via the

dunecontrolscript

- useful commands:

configure: configure libraries, variables, paths, filesmake all: build librariesall: includesconfigureandmake all

Build system - dunecontrol

- For Dune, CMake is triggered via the

dunecontrolscript

- useful options:

--opts=<optionfile.opts>specify e.g. compiler flags; DuMux providesdumux/cmake.opts.--build-dir=<build directory>specify path for out-of-source build; Default: every module contains its own build directory calledbuild-cmake/--only <module>only apply command to a specific module/s (comma-separated list)

Build system - dunecontrol

You may have to reconfigure (rerun dunecontrol) whenever

a dependency changes or a Dune library is updated. CMake caches. To

reconfigure, you may need to remove the cache first:

removes the CMake cache files from all Dune modules.

(Use --only <module> to only remove caches of

specific modules.)

Build system - basic CMake functions

- Use

add_subdirectoryfor recursively adding subdirectories - Subdirectory must contain a

CMakeLists.txt(can be empty) - Executables are added via

add_executable(<name> source1 [source2 ...]) - Tests are added via

dumux_add_testwhich also add a test executable to the test suite - Symlinks can be added via

dune_symlink_to_source_files

Build system

CMakeLists.txt for an example application: create a test

target exercise_basic_2p by defining a name and source

file:

File:

dumux-course/exercises/exercise-basic/CMakeLists.txt

Build system

Another example: add extra compile definitions and commands (typical for DuMux regression tests):

dumux_add_test(

NAME test_2p_incompressible_box

SOURCES test_2p_fv.cc

COMPILE_DEFINITIONS TYPETAG=TwoPIncompressibleBox

COMMAND ${CMAKE_SOURCE_DIR}/bin/testing/runtest.py

CMD_ARGS --script fuzzy

--files ${CMAKE_SOURCE_DIR}/test/references/lensbox-reference.vtu

${CMAKE_CURRENT_BINARY_DIR}/2p_box-00007.vtu

--command "${CMAKE_CURRENT_BINARY_DIR}/test_2p_incompressible_box

test_2p.input -Problem.Name 2p_box"

)File:

dumux/test/porousmediumflow/2p/incompressible/CMakeLists.txt

Build system

Extra: Create linked parameter file in build-cmake

folder, separately add executable and set compile definitions for an

executable:

dune_symlink_to_source_files(FILES "params.input")

# or: add_input_file_links()

# using tpfa

add_executable(test_2p_incompressible_tpfa EXCLUDE_FROM_ALL main.cc)

target_compile_definitions(

test_2p_incompressible_tpfa PUBLIC TYPETAG=TwoPIncompressibleTpfa

)File:

dumux/test/porousmediumflow/2p/incompressible/CMakeLists.txt

Build system

Useful resources:

- CMake online documentation

- Dune CMake online documentation for CMake functions available with Dune.

Parallelism

Distributed memory parallelism with MPI

- MPI stands for Message Passing Interface

- MPI manages communication between processes that run in parallel

Distributed memory parallelism with MPI

- Main idea is the concept of domain decomposition

- The grid manages the grid partitioning

- Each local subdomain is assembled on an individual process (rank)

- Solvers have to handle decomposed vectors/matrices

- Most solvers in DuMux (iterative Krylov subspace methods based on dune-istl) are capable of MPI parallel solving

Distributed memory parallelism with MPI

Run with:

Distributed memory parallelism with MPI

Partitioned VTK output files:

- Each rank creates its own

*.vtu/*.vtpfile - Combined into

*.pvtu/*.pvtpfiles for each time step *.pvdgroups all*.pvtu/*.pvtpfiles into a time series

Shared-memory parallelism

- Dumux can exploit parallelism with the shared memory model

- Used in the

Dumux::FVAssemblerby default to assemble the residual and stiffness matrix - Is enabled when a multi-threading backend is found

- Backend is selected by

CMAKEduring configuration and stored inDUMUX_MULTITHREADING_BACKEND - Possible examples are

OpenMP,TBB, C++ parallel algorithms, andKokkos

Shared-memory parallelism

Restrict the number of threads

Number of threads can be restricted via the environment variable

DUMUX_NUM_THREADS

Shared-memory parallelism

Multi-threaded assembly

Control use of multi-threading during assembly in input file:

Defaults to true.

Shared-memory parallelism

Set multithreading backend to e.g. OpenMP in

dumux/cmake.opts before building the modules:

Other valid options are:

TBB,Cpp,Kokkos,Serial. If left

empty, it is automatically set by CMake. If no suitable backend is

found, CMake defaults to Serial.

Shared-memory parallelism

During dunecontrol, the chosen backend is displayed in

the output via

Exercises

Exercises

Basic application setup:

- Go to Exercise basic

The main function:

- Go to Exercise main file