Bayesian inference

With Bayesian inference we ask the question ‘how does our understanding of the inputs change given some observation of the outputs of the model?’, i.e. we update the prior distributions of the inputs to posterior distributions based on observations.

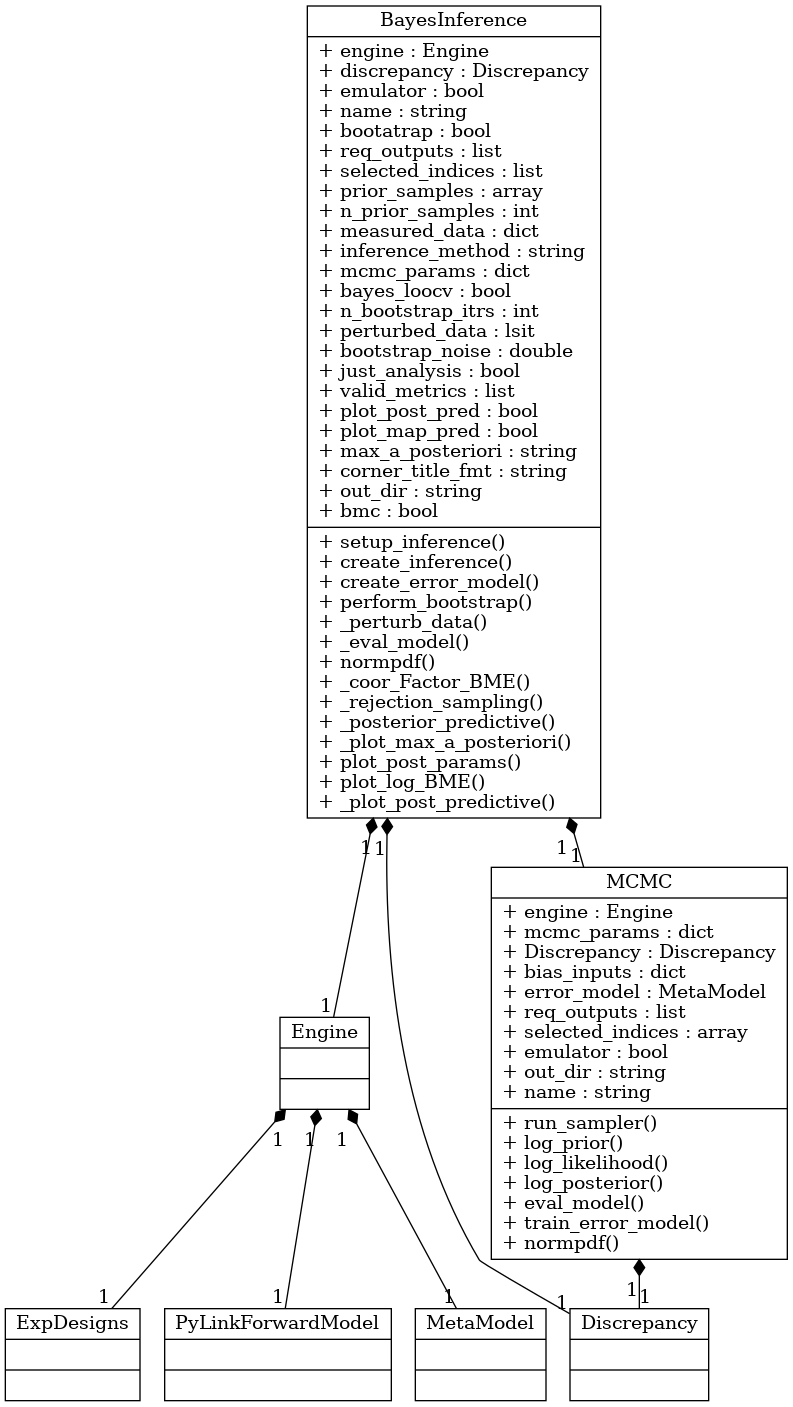
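Written out, this updating step is Bayes' theorem. With θ denoting the uncertain inputs and y the observations, the prior p(θ) is combined with the likelihood p(y | θ) to give the posterior. The normalizing constant p(y) is the Bayesian model evidence (BME), which is the quantity estimated when validating a model. The notation here is ours, in the standard textbook form:

```latex
p(\theta \mid y) = \frac{p(y \mid \theta)\, p(\theta)}{p(y)},
\qquad
p(y) = \int p(y \mid \theta)\, p(\theta)\, \mathrm{d}\theta
```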

Bayesvalidrox provides a dedicated class to perform this task, bayesvalidrox.bayes_inference.bayes_inference.BayesInference, which uses bayesvalidrox.bayes_inference.post_sampler.PostSampler objects to generate posterior samples.

The observations are set as Model.observations of the model in the Engine, and an estimate of their uncertainty can be provided as a bayesvalidrox.bayes_inference.discrepancy.Discrepancy object.

In addition to inference, the class bayesvalidrox.bayes_inference.bayes_inference.BayesInference can also be used to validate a model or surrogate model on given observations by estimating the Bayesian model evidence (BME).

The observations for validation are given as Model.observations_valid and can either be perturbed with additive Gaussian noise or bootstrapped with leave-one-out cross-validation.
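The two ways of varying the validation observations can be sketched in a few lines of NumPy. This only illustrates the general idea and is not bayesvalidrox's implementation: the helper names perturb_normal and loo_subsets are hypothetical, and the noise is assumed here to be additive with a fixed standard deviation.

```python
import numpy as np

def perturb_normal(obs, n_itrs, noise_std, rng):
    """Return n_itrs noisy copies of obs, shape (n_itrs, n_points).

    Assumption: additive zero-mean Gaussian noise with a fixed standard deviation.
    """
    obs = np.asarray(obs, dtype=float)
    return obs + rng.normal(0.0, noise_std, size=(n_itrs, obs.size))

def loo_subsets(n_points):
    """Yield (kept_indices, left_out_index) pairs for leave-one-out."""
    idx = np.arange(n_points)
    for i in range(n_points):
        yield np.delete(idx, i), i

rng = np.random.default_rng(0)
obs = np.array([1.0, 2.0, 3.0])
replicates = perturb_normal(obs, n_itrs=500, noise_std=0.2, rng=rng)
print(replicates.shape)  # (500, 3)

for kept, left_out in loo_subsets(len(obs)):
    print(left_out, kept)  # 0 [1 2], then 1 [0 2], then 2 [0 1]
```

Each row of replicates is one perturbed set of observations; each leave-one-out pair holds out a single observed point.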

Sampler classes

Bayesvalidrox supports two options for generating posterior samples: rejection sampling and Markov chain Monte Carlo (MCMC).

Each of them is implemented as a separate child class of the bayesvalidrox.bayes_inference.post_sampler.PostSampler class.
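To make the rejection-sampling idea concrete, here is a minimal NumPy sketch on a hypothetical one-parameter toy model with an assumed Gaussian likelihood. Each prior sample is accepted with probability proportional to its likelihood, so the accepted samples approximately follow the posterior; the mean likelihood over the prior samples is at the same time a simple Monte Carlo estimate of the BME. Normalizing by the largest likelihood found among the samples is a practical choice of this sketch, and the PostSampler subclasses may handle this differently.

```python
import numpy as np

def forward_model(theta):
    # Hypothetical toy model: two outputs depending on one input.
    theta = np.asarray(theta, dtype=float)
    return np.stack([theta, theta**2], axis=-1)

rng = np.random.default_rng(42)
n_prior = 100_000
prior = rng.uniform(0.0, 4.0, size=n_prior)    # prior samples of theta

y_obs = forward_model(2.0)                      # synthetic observation [2, 4]
sigma2 = np.array([0.5, 0.5])                   # assumed discrepancy variances

# Gaussian log-likelihood of every prior sample (independent errors)
resid = forward_model(prior) - y_obs            # shape (n_prior, 2)
log_lik = -0.5 * np.sum(resid**2 / sigma2 + np.log(2 * np.pi * sigma2), axis=1)
lik = np.exp(log_lik)

# Rejection step: accept each sample with probability L / max(L)
accept = rng.uniform(size=n_prior) < lik / lik.max()
posterior = prior[accept]

# Monte Carlo estimate of the BME: mean likelihood over the prior samples
log_bme = np.log(lik.mean())
print(posterior.size, posterior.mean(), log_bme)
```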

Additional settings for MCMC can be passed to bayesvalidrox.bayes_inference.bayes_inference.BayesInference as a dictionary called mcmc_params, which can include the following keys:

- prior_samples: initial samples
- nsteps: number of steps
- nwalkers: number of walkers
- nburn: length of the burn-in
- moves: the MCMC moves to use, e.g. taken from emcee
- mp: setting for multiprocessing
- verbose: verbosity
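The roles of nwalkers, nsteps and nburn can be seen in a small ensemble sampler. The sketch below is a simplified, serial version of the Goodman-Weare stretch move (the default move in emcee) in plain NumPy, run on a hypothetical one-dimensional standard normal target. It only illustrates the concepts; it is not how bayesvalidrox or emcee implement sampling internally, and the function names are our own.

```python
import numpy as np

def log_post(theta):
    # Hypothetical target: standard normal posterior in one dimension.
    return -0.5 * np.sum(theta**2)

def stretch_move_sampler(log_prob, p0, nsteps, nburn, a=2.0, seed=0):
    """Serial Goodman-Weare stretch-move ensemble sampler.

    p0: initial walker positions, shape (nwalkers, ndim).
    Returns the flat chain after discarding nburn steps and the acceptance fraction.
    """
    rng = np.random.default_rng(seed)
    walkers = np.array(p0, dtype=float)
    nwalkers, ndim = walkers.shape
    logp = np.array([log_prob(w) for w in walkers])
    chain = np.empty((nsteps, nwalkers, ndim))
    n_accept = 0
    for step in range(nsteps):
        for k in range(nwalkers):
            # Pick a partner walker j different from k
            j = rng.integers(nwalkers - 1)
            if j >= k:
                j += 1
            # Draw the stretch factor z from g(z) ~ 1/sqrt(z) on [1/a, a]
            z = ((a - 1.0) * rng.random() + 1.0) ** 2 / a
            proposal = walkers[j] + z * (walkers[k] - walkers[j])
            logp_new = log_prob(proposal)
            log_ratio = (ndim - 1) * np.log(z) + logp_new - logp[k]
            if np.log(rng.random()) < log_ratio:
                walkers[k] = proposal
                logp[k] = logp_new
                n_accept += 1
        chain[step] = walkers
    flat = chain[nburn:].reshape(-1, ndim)   # drop the burn-in, merge walkers
    return flat, n_accept / (nsteps * nwalkers)

p0 = np.random.default_rng(1).normal(size=(30, 1))   # nwalkers=30, ndim=1
flat, acc = stretch_move_sampler(log_post, p0, nsteps=2000, nburn=200)
print(flat.shape)  # (54000, 1)
```

The flat chain has (nsteps - nburn) * nwalkers rows, which is also how the "Flat chain shape" in the diagnostics output arises.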

Example

For this example we need to add the following imports.

>>> import pandas as pd
>>> from bayesvalidrox import Discrepancy, BayesInference

In order to run Bayesian inference we first need to provide an observation.

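For this example we evaluate the model on a chosen sample and store the resulting values as Model.observations.

Since Model.observations expects a 1D array for each output key, the format has to be adjusted slightly: the x_values are kept as they are, while for every other key the first (and only) row of the model output is used.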

>>> true_sample = [[2]]
>>> observation = model.run_model_parallel(true_sample)
>>> model.observations = {}
>>> for key in observation:
...     if key == 'x_values':
...         # keep the x-values unchanged
...         model.observations[key] = observation[key]
...     else:
...         # take the output of the single evaluated sample as a 1D array
...         model.observations[key] = observation[key][0]
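To see what the loop does to the data, it can be run on a stand-in dictionary. The layout assumed here (a 1D 'x_values' entry plus one 2D array per output key with one row per evaluated sample, here the hypothetical key 'Z') is inferred from the indexing in the loop above and may differ from the actual return value of run_model_parallel.

```python
import numpy as np

# Hypothetical stand-in for the model output of a single sample
observation = {
    'x_values': np.array([0.0, 0.5, 1.0]),
    'Z': np.array([[1.0, 1.5, 2.0]]),   # shape (1, 3): one row per sample
}

observations = {}
for key, value in observation.items():
    if key == 'x_values':
        observations[key] = value       # keep the x-axis as is
    else:
        observations[key] = value[0]    # first (only) row -> 1D array

print({k: v.shape for k, v in observations.items()})
# {'x_values': (3,), 'Z': (3,)}
```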

Next we define the uncertainty on the observation with the class bayesvalidrox.bayes_inference.discrepancy.Discrepancy.

For this example we assume a zero-mean Gaussian uncertainty that depends on the observed values, i.e. larger values are associated with a larger uncertainty.

The parameters contain the variance for each point in the observation.

Warning

For models with only a single uncertain input parameter, numerical issues can appear when the discrepancy depends only on the observed data. To avoid them, a small value can be added to the variance of the discrepancy, as done in the example below.

>>> obsData = pd.DataFrame(model.observations, columns=model.output.names)
>>> discrepancy = Discrepancy('')
>>> discrepancy.type = 'Gaussian'
>>> discrepancy.parameters = obsData**2 + 0.01
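The effect of the added 0.01 can be seen in a Gaussian log-likelihood built from these variances. The sketch assumes that the discrepancy enters as independent zero-mean Gaussian errors with one variance per observed point; the data and the function gaussian_log_likelihood are hypothetical, since bayesvalidrox computes its likelihood internally. Without the offset, an observed value of 0 would give a variance of exactly 0, and both the division and the logarithm below would break down.

```python
import numpy as np
import pandas as pd

# Hypothetical observed data for one output 'Z' at three points
obs_data = pd.DataFrame({'Z': [0.0, 1.5, 2.0]})

# Same rule as above: variance grows with the observed value,
# plus 0.01 so that no variance is exactly zero
variance = obs_data**2 + 0.01

def gaussian_log_likelihood(y_model, y_obs, var):
    """Log-likelihood of independent zero-mean Gaussian errors."""
    resid = y_model - y_obs
    return -0.5 * np.sum(resid**2 / var + np.log(2 * np.pi * var))

y_obs = obs_data['Z'].to_numpy()
var = variance['Z'].to_numpy()
ll = gaussian_log_likelihood(y_obs + 0.1, y_obs, var)  # model off by 0.1
print(ll)
```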

Now we can initialize an object of class bayesvalidrox.bayes_inference.bayes_inference.BayesInference with the desired properties.

The object is created from our Engine.

To use the surrogate during inference, set use_emulator to True; otherwise the model is evaluated directly.

We also set the defined discrepancy, and set plot to True if the posterior predictions should be visualized.

>>> bayes = BayesInference(Engine_)
>>> bayes.use_emulator = True
>>> bayes.discrepancy = discrepancy
>>> bayes.plot = True

To run with rejection sampling, we set inference_method to 'rejection'.

>>> bayes.inference_method = 'rejection'

To sample with MCMC instead, set inference_method to 'MCMC' and give additional settings in mcmc_params.

For this example we use the Python package emcee to define the MCMC moves.

>>> import emcee
>>> bayes.inference_method = 'MCMC'
>>> bayes.mcmc_params = {
...     'nsteps': 10000,
...     'nwalkers': 30,
...     'moves': emcee.moves.KDEMove(),
...     'mp': False,
...     'verbose': False,
... }

Then we run the inference, here also saving the results.

>>> bayes.run_inference(save=True)

Unless bayes.out_dir is set to a different directory, the outputs are written to the folder Outputs_Bayes_model_Calib.

This folder contains the posterior distribution of the input parameters and the predictions obtained at the posterior mean.

For inference with MCMC, chain diagnostics are also printed to the console.

---------------Posterior diagnostics---------------
Mean auto-correlation time: 2.057
Thin: 1
Burn-in: 4
Flat chain shape: (13380, 1)
Mean acceptance fraction*: 0.752
Gelman-Rubin Test**: [1.001]
* This value must lay between 0.234 and 0.5.
** These values must be smaller than 1.1.
--------------------------------------------------
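The Gelman-Rubin value compares the spread between chains with the spread within them. A minimal sketch of the classic potential scale reduction factor follows, treating each walker as one chain; bayesvalidrox's own computation may differ in details, and the test chains are synthetic.

```python
import numpy as np

def gelman_rubin(chains):
    """Potential scale reduction factor (R-hat) per parameter.

    chains: array of shape (n_steps, n_chains, n_params).
    """
    n = chains.shape[0]
    chain_means = chains.mean(axis=0)              # (n_chains, n_params)
    chain_vars = chains.var(axis=0, ddof=1)        # (n_chains, n_params)
    W = chain_vars.mean(axis=0)                    # mean within-chain variance
    B = n * chain_means.var(axis=0, ddof=1)        # between-chain variance
    var_hat = (n - 1) / n * W + B / n              # pooled variance estimate
    return np.sqrt(var_hat / W)

rng = np.random.default_rng(3)
good = rng.normal(size=(2000, 30, 1))              # well-mixed chains
bad = good + np.arange(30)[None, :, None]          # chains stuck at different levels
r_good, r_bad = gelman_rubin(good), gelman_rubin(bad)
print(r_good, r_bad)
```

Well-mixed chains give values close to 1, while chains stuck around different values give values far above the 1.1 threshold.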

For validation, we can additionally set how the observations are bootstrapped.

In this example we perturb the observations with Gaussian noise.

>>> bayes.bootstrap_method = 'normal'
>>> bayes.n_bootstrap_itrs = 500
>>> bayes.bootstrap_noise = 0.2

Since this is now a validation setting, the bayesvalidrox.bayes_inference.bayes_inference.BayesInference object will look for validation observations.

Here we simply reuse the observations used for inference.

>>> model.observations_valid = model.observations

We can calculate additional validation metrics by adding them to valid_metrics.

Options include the Kullback-Leibler divergence ('kld') and the information entropy ('inf_entropy').

>>> bayes.valid_metrics = ['kld', 'inf_entropy']
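Both metrics can be estimated from the same quantities as the BME. A common approach uses the identities KLD = E_post[ln L] - ln BME and H = ln BME - E_post[ln L] - E_post[ln p(θ)], with the expectations taken over posterior samples. The sketch below applies them to a hypothetical one-dimensional problem (standard normal prior, a single observation y = 1 with unit noise variance) where the exact values are known: KLD ≈ 0.222, H ≈ 1.072 and ln BME ≈ -1.516. Whether bayesvalidrox uses exactly these estimators is an assumption.

```python
import numpy as np

rng = np.random.default_rng(7)
n = 200_000
theta = rng.normal(0.0, 1.0, size=n)                 # prior N(0, 1)
log_prior = -0.5 * theta**2 - 0.5 * np.log(2 * np.pi)

y_obs, sigma2 = 1.0, 1.0                              # hypothetical observation
log_lik = -0.5 * ((y_obs - theta)**2 / sigma2 + np.log(2 * np.pi * sigma2))

lik = np.exp(log_lik)
log_bme = np.log(lik.mean())                          # Monte Carlo BME

accept = rng.uniform(size=n) < lik / lik.max()        # rejection sampling
post_log_lik = log_lik[accept]
post_log_prior = log_prior[accept]

kld = post_log_lik.mean() - log_bme
inf_entropy = log_bme - post_log_lik.mean() - post_log_prior.mean()
print(log_bme, kld, inf_entropy)
```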

Now we can run the validation, which will return the log-BME of the model or surrogate model on the observations.

>>> log_bme = bayes.run_validation()

Unless bayes.out_dir is set to a different directory, the outputs are written to the folder Outputs_Bayes_model_Valid, and the log-BME is visualized.

The additional metrics are stored in bayes.kld and bayes.inf_entropy.