Bayesian multi-model comparison

Bayesvalidrox provides three methods for comparing a set of competing models against each other, given observations of the model outputs: Bayes factors, model weights, and confusion matrices.

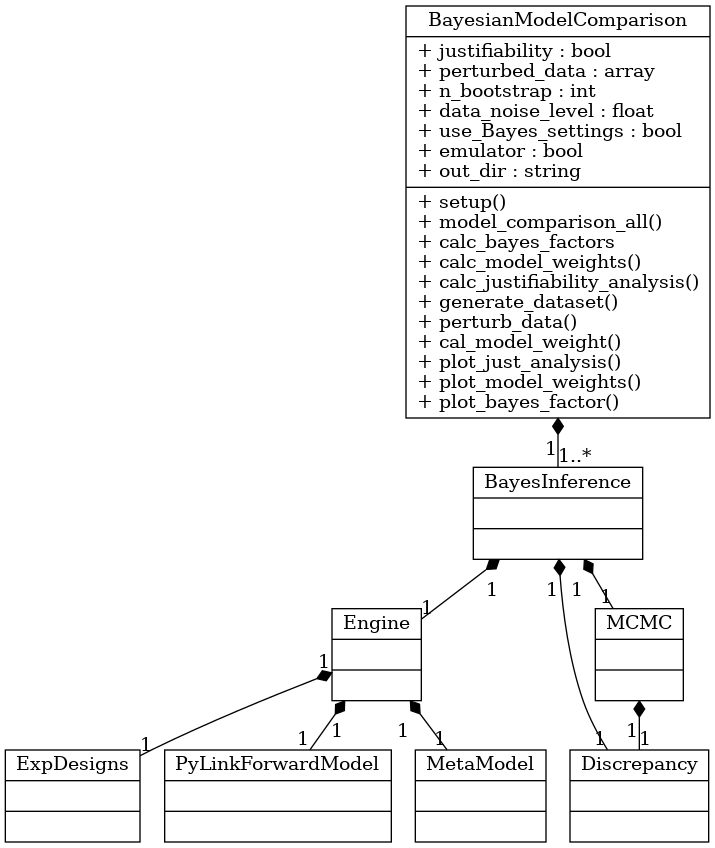

These methods are implemented in the class bayesvalidrox.bayes_inference.bayes_model_comparison.BayesModelComparison. Each one can be called on its own through its respective function, or all three can be run consecutively with the function model_comparison_all().
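Bayes factors and model weights both follow from the Bayesian model evidence (BME) p(D|M_k) of each model. The NumPy sketch below computes them from hypothetical log-evidence values, assuming equal prior probabilities for the models; it illustrates the definitions and is not the bayesvalidrox implementation.

```python
import numpy as np

# Hypothetical log-evidences log p(D | M_k); illustrative numbers only,
# not output of bayesvalidrox.
log_bme = {"linear": -12.3, "degthree": -10.1}
names = list(log_bme)
logs = np.array([log_bme[n] for n in names])

# Bayes factor matrix: BF[i, j] = p(D | M_i) / p(D | M_j),
# evaluated as exp(log-evidence difference) to avoid underflow.
bayes_factor = np.exp(logs[:, None] - logs[None, :])

# Posterior model weights with equal prior model probabilities:
# w_k = p(D | M_k) / sum_l p(D | M_l), shifted by the maximum for stability.
unnormalized = np.exp(logs - logs.max())
weights = unnormalized / unnormalized.sum()

for name, w in zip(names, weights):
    print(f"{name}: weight = {w:.3f}")
print(f"BF(degthree vs. linear) = {bayes_factor[1, 0]:.2f}")
```

With equal prior model probabilities, the ratio of two weights equals the corresponding Bayes factor, so both methods point to the same preferred model; they differ in presentation (pairwise ratios versus a normalized distribution over all models).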

Example

To perform model comparison, we first need to define the set of competing models.

For this, we create an additional model in the file model2.py, based on the example model from Models.

>>> import numpy as np
>>> def model2(samples, x_values):
...     # Cubic model: the first input parameter scales x_values**3
...     poly = samples[0]*np.power(x_values, 3)
...     outputs = {'A': poly, 'x_values': x_values}
...     return outputs
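Before wrapping the function in a PyLinkForwardModel, it can be useful to call it directly and check that it returns an array for the output name 'A' together with x_values. The snippet below repeats the definition so that it runs on its own; the parameter value 2.0 and the evaluation points are hypothetical and chosen only for this check.

```python
import numpy as np

def model2(samples, x_values):
    # Cubic model: output A is the first parameter times x**3
    poly = samples[0] * np.power(x_values, 3)
    outputs = {'A': poly, 'x_values': x_values}
    return outputs

# Hypothetical inputs for a quick check: one parameter value, five x positions
x_values = np.linspace(0, 1, 5)
out = model2(np.array([2.0]), x_values)

print(sorted(out))   # output keys
print(out['A'])      # 2 * x**3 at each x position
```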

Next, we build a surrogate for this model, following the same steps as for the surrogate in Training surrogate models.

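>>> from bayesvalidrox import PyLinkForwardModel, PCE, ExpDesigns, Engine
>>> # Inputs and x_values are reused from the previous sections
>>> # Forward model: link the Python function model2 from model2.py
>>> model2 = PyLinkForwardModel()
>>> model2.link_type = 'Function'
>>> model2.py_file = 'model2'
>>> model2.name = 'model2'
>>> model2.output.names = ['A']
>>> model2.func_args = {'x_values': x_values}
>>> # Surrogate: PCE of degree 3, q-norm 1, fitted with FastARD regression
>>> meta_model2 = PCE(Inputs)
>>> meta_model2.pce_reg_method = 'FastARD'
>>> meta_model2.pce_deg = 3
>>> meta_model2.pce_q_norm = 1
>>> # Experimental design: 30 randomly drawn training samples
>>> exp_design2 = ExpDesigns(Inputs)
>>> exp_design2.n_init_samples = 30
>>> exp_design2.sampling_method = 'random'
>>> # Combine model, surrogate and design, then train the surrogate
>>> engine2 = Engine(meta_model2, model2, exp_design2)
>>> engine2.train_normal()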

To perform model comparison, we use the class bayesvalidrox.bayes_inference.bayes_model_comparison.BayesModelComparison.

>>> from bayesvalidrox import BayesModelComparison

We collect the engines that should be compared in a dictionary, and assign them names.

>>> engines = {
...     "linear": engine,
...     "degthree": engine2,
... }

As the comparison uses the class bayesvalidrox.bayes_inference.bayes_inference.BayesInference internally, we can also set the properties of that class.

These are collected in a dictionary and given to the function calls that perform the model comparison.

In this example we use the following settings.

>>> bayes_opts = {
...     "bootstrap_method": "normal",
...     "n_prior_samples": 100,
...     "discrepancy": DiscrepancyOpts,  # discrepancy settings, defined beforehand
...     "use_emulator": True,  # evaluate the surrogates instead of the models
...     "plot": False,
... }
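The option n_prior_samples sets how many samples are drawn from the prior. A common way such samples enter model comparison is a plain Monte Carlo estimate of the evidence, p(D|M) ≈ (1/N) Σ_n p(D|θ_n, M) with θ_n drawn from the prior. The NumPy sketch below illustrates this estimator for two toy models; the uniform prior on a single parameter, the Gaussian noise level and the synthetic data are assumptions, and the code is not the bayesvalidrox implementation.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical setup: one parameter a ~ U(0, 2), Gaussian noise with known sigma
x_values = np.linspace(0, 1, 10)
sigma = 0.1
obs = 1.5 * x_values**3 + rng.normal(0.0, sigma, x_values.size)  # synthetic data

def linear(a, x):
    return a * x

def degthree(a, x):
    return a * x**3

def log_bme_mc(model, n_prior_samples):
    """Monte Carlo estimate of log p(D|M): log of the mean likelihood over prior samples."""
    a = rng.uniform(0.0, 2.0, n_prior_samples)           # draws from the prior
    preds = model(a[:, None], x_values[None, :])         # shape (n_prior_samples, n_x)
    log_lik = (-0.5 * np.sum(((obs - preds) / sigma) ** 2, axis=1)
               - x_values.size * np.log(np.sqrt(2.0 * np.pi) * sigma))
    m = log_lik.max()                                    # log-mean-exp for stability
    return m + np.log(np.mean(np.exp(log_lik - m)))

for n in (100, 10_000):
    print(n, log_bme_mc(linear, n), log_bme_mc(degthree, n))
```

Rerunning with different seeds shows that the estimate for small n scatters more, because only a few prior samples fall where the likelihood is large. This sensitivity is why n_prior_samples should not be too small; evaluating surrogates (use_emulator set to True) makes larger sample sizes cheaper.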

Then we create an object of class BayesModelComparison.

>>> bmc = BayesModelComparison(model_dict=engines, bayes_opts=bayes_opts)

Now we can run the full model comparison.

>>> output_dict = bmc.model_comparison_all()

The created plots are saved in the folder Outputs_Comparison.
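model_comparison_all() also produces the confusion matrix. One way to read such a matrix: each row fixes one model as the data-generating "truth", and the entries give the average posterior weight that the comparison assigns to each candidate when the data really come from that model. The NumPy sketch below builds such a matrix for two toy models under assumed settings (uniform prior, Gaussian noise, 50 synthetic datasets per row, Monte Carlo evidence); it illustrates the concept and is not necessarily how bayesvalidrox constructs its confusion matrices.

```python
import numpy as np

rng = np.random.default_rng(1)

# Hypothetical toy setup (not bayesvalidrox objects)
x_values = np.linspace(0, 1, 10)
sigma = 0.1
models = {"linear": lambda a, x: a * x,
          "degthree": lambda a, x: a * x**3}
names = list(models)
prior_a = rng.uniform(0.0, 2.0, 2000)   # shared prior samples for a ~ U(0, 2)

def log_bme(model, obs):
    """Monte Carlo log-evidence: log of the mean likelihood over prior samples.
    The Gaussian normalizing constant is omitted; it is identical for all
    models here and cancels in the weights."""
    preds = model(prior_a[:, None], x_values[None, :])
    log_lik = -0.5 * np.sum(((obs - preds) / sigma) ** 2, axis=1)
    m = log_lik.max()
    return m + np.log(np.mean(np.exp(log_lik - m)))

def model_weights(obs):
    """Posterior model weights assuming equal prior model probabilities."""
    logs = np.array([log_bme(models[n], obs) for n in names])
    w = np.exp(logs - logs.max())
    return w / w.sum()

n_datasets = 50
confusion = np.zeros((len(names), len(names)))
for i, true_name in enumerate(names):
    for _ in range(n_datasets):
        a_true = rng.uniform(0.0, 2.0)           # "true" parameter drawn from the prior
        obs = models[true_name](a_true, x_values) + rng.normal(0.0, sigma, x_values.size)
        confusion[i] += model_weights(obs)
    confusion[i] /= n_datasets                   # row i: mean weights when data come from model i

print(names)
print(np.round(confusion, 2))
```

A strongly diagonal matrix suggests the data are informative enough to tell the models apart; large off-diagonal entries indicate that one model can mimic the other, so a high weight for it on the real observations should be interpreted with care.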